Introduction

Security has become a non-negotiable aspect of software development. Integrating it into every phase of the Software Development Life Cycle (SDLC) helps ensure that vulnerabilities are identified and addressed early, before they can be exploited in breaches and attacks.

Why Security Must Be Integrated in Every Stage of SDLC

Software development involves multiple stages: write, test, package, provision, deploy, and monitor. Each stage offers its own opportunities to incorporate security practices that mitigate risk. When security is neglected at any stage, it can leave gaps or weaknesses that attackers can exploit, with severe consequences such as data breaches, loss of customer trust, and financial damage.

For example, during the planning phase, it is important to identify security requirements alongside functionality and performance requirements. This sets the stage for a secure architecture. In the design phase, threat modeling should be conducted to anticipate potential security threats. During development, developers should adhere to secure coding standards, while in the testing phase, penetration testing and security vulnerability assessments should be performed to uncover potential flaws.

Deferring security to the final stages of development or, worse, until after the software has been deployed is a common but dangerous mistake. The later a security flaw is discovered, the more it costs to fix, and by then the software may already be exposed to malicious actors.

The Cost of Ignoring Security

Ignoring security during the development lifecycle can have disastrous outcomes. For instance, financial losses can occur due to direct damages from a cyberattack, such as ransomware or data breaches. Furthermore, the damage to a company's reputation can be long-lasting, as customers and partners may lose confidence in a business that has experienced a security incident. In some cases, organizations could face legal actions and regulatory fines, especially if they failed to adhere to industry-specific security standards or data protection laws like GDPR.

Thus, security should never be an afterthought or treated as an additional layer that can be added at the end of the process. It must be treated as an integral component of the entire development workflow, ensuring that security is addressed proactively, continuously, and comprehensively throughout the SDLC.

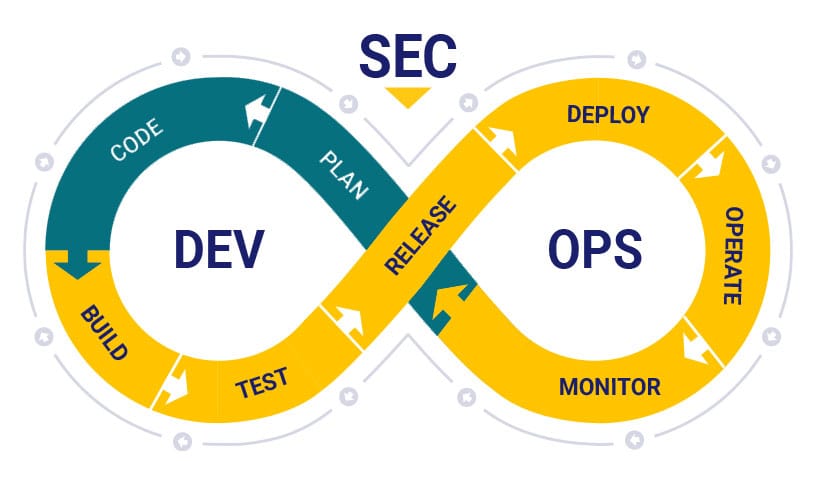

The Role of DevSecOps

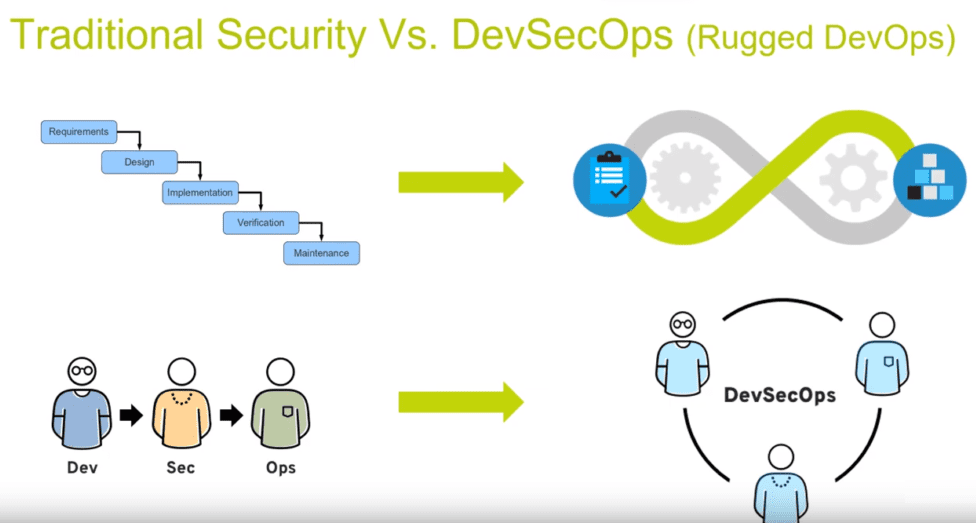

DevSecOps represents a paradigm shift that emphasizes the importance of security in the DevOps workflow. DevOps traditionally focuses on improving the speed and collaboration between development and operations teams. DevSecOps builds upon this by embedding security into the DevOps process from the outset. This means that security is not the sole responsibility of a security team but is a shared responsibility across all team members involved in development, operations, and testing.

In a DevSecOps culture, security tools and practices are integrated into continuous integration/continuous deployment (CI/CD) pipelines. This ensures that code is scanned for vulnerabilities early in the development process, and any security issues are detected and addressed before they make it to production. Automation plays a significant role here, with security checks becoming an integral part of the build and deployment processes, making security a seamless part of the workflow.

Furthermore, DevSecOps encourages collaboration and communication among all team members, from developers and operations teams to security professionals. This integrated approach ensures that security is not treated as a separate function but as part of the ongoing process of developing and deploying software.

Minimizing Risks and Ensuring Secure Software

The primary goal of incorporating security into every phase of the SDLC, especially with a DevSecOps approach, is to minimize risks and ensure that the software is robust enough to withstand current and future threats. By addressing security from the beginning, teams can mitigate common vulnerabilities such as SQL injection, cross-site scripting (XSS), and other attack vectors that often lead to breaches.

Ultimately, the software development process must prioritize security as an essential component. By using DevSecOps principles, organizations can deliver secure, reliable software that is capable of standing up to the evolving landscape of cybersecurity threats, protecting both users and businesses in the process.

In short, security should never be an afterthought in software development. A proactive, integrated approach such as DevSecOps embeds security in every stage of the SDLC, helping organizations reduce risk and deliver secure, reliable software.

Development Stage (Write)

In the "Write" stage of the software development process, security can be strengthened with static code analysis and linting. These techniques analyze source code automatically and warn when they detect vulnerabilities, leaked credentials, or other security issues.
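As a minimal illustration of credential-leak detection, the sketch below scans source text line by line with regular expressions. The two rules are assumptions chosen for the example (the `AKIA` prefix used by AWS access key IDs and a generic `password = "..."` assignment); real scanners ship much larger, tuned rule sets.

```python
import re
from typing import List, Tuple

# Illustrative rules only; production scanners use many more, carefully tuned patterns.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]+['\"]"),
}

def scan_for_secrets(source: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings

if __name__ == "__main__":
    sample = 'user = "admin"\npassword = "hunter2"\nkey = "AKIAABCDEFGHIJKLMNOP"\n'
    for lineno, rule in scan_for_secrets(sample):
        print(f"line {lineno}: possible {rule}")
```

Run as a pre-commit hook or an early pipeline step, a check like this stops a credential before it ever reaches the shared repository.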

By using tools such as SonarQube and Checkmarx, developers can identify potential security issues early in the development process, before the code is integrated or deployed. SonarQube, for example, performs static code analysis to identify issues like SQL injection, Cross-Site Scripting (XSS), and other security flaws. Meanwhile, Checkmarx offers in-depth, contextual analysis, helping teams pinpoint and fix weaknesses that might have been overlooked during manual testing.

Tools Used

SonarQube

SonarQube is a widely used tool that continuously inspects code quality and security. It helps developers identify problems such as code duplication, missing documentation, and security risks like SQL injection and XSS. When SonarQube is integrated into the build process, developers receive feedback on potential security flaws with every build, which keeps vulnerabilities from progressing to later stages of development and maintains code quality and security.

Checkmarx

Checkmarx is a powerful static application security testing (SAST) tool that goes beyond just identifying vulnerabilities. It performs a comprehensive analysis of source code, considering the application context to detect and help resolve vulnerabilities that could be exploited by attackers. Checkmarx supports both open-source and custom code, offering detailed insights into security risks and providing guidance on how to fix them. It assists development teams in adopting a proactive approach to security by identifying problems early in the coding phase.

Why Static Code Analysis and Linting Matter

Static code analysis and linting are vital tools for improving software security. These practices help automate the detection of vulnerabilities in the source code that may not be visible in functional or manual testing. Static code analysis looks for patterns and issues that could lead to security vulnerabilities, such as unvalidated inputs, unsafe data handling, or improper use of encryption. Linting, on the other hand, ensures that code adheres to predefined coding standards, which helps prevent errors and inconsistencies that could introduce security risks.
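To show what "looking for patterns" means in practice, here is a toy static analyzer built on Python's standard `ast` module. It flags calls to a method named `execute` whose first argument is built with `+`, `%`, an f-string, or `.format()`, a common SQL-injection pattern. The rule and the `execute` method name are assumptions for illustration; tools such as SonarQube and Checkmarx use far richer data-flow analysis.

```python
import ast
from typing import List

def find_unsafe_sql(source: str) -> List[int]:
    """Return line numbers of .execute() calls whose query string is built dynamically."""
    unsafe_lines = []
    for node in ast.walk(ast.parse(source)):
        # Only look at calls of the form <something>.execute(<query>, ...).
        if not (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "execute"
            and node.args
        ):
            continue
        query = node.args[0]
        is_fstring = isinstance(query, ast.JoinedStr)  # f"... {x}"
        is_operator = isinstance(query, ast.BinOp) and isinstance(
            query.op, (ast.Add, ast.Mod)
        )  # "..." + x  or  "..." % x
        is_format = (
            isinstance(query, ast.Call)
            and isinstance(query.func, ast.Attribute)
            and query.func.attr == "format"
        )  # "...".format(x)
        if is_fstring or is_operator or is_format:
            unsafe_lines.append(node.lineno)
    return sorted(unsafe_lines)

SAMPLE = '''
cur.execute("SELECT * FROM users WHERE id = " + user_id)
cur.execute(f"DELETE FROM users WHERE id = {user_id}")
cur.execute("SELECT * FROM users WHERE id = ?", (user_id,))
'''

if __name__ == "__main__":
    print(find_unsafe_sql(SAMPLE))  # [2, 3]
```

The parameterized query on the last sample line is not flagged, because its SQL text is a constant and the user value travels separately.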

Integrating Security Early in the Development Process

The advantage of using tools like SonarQube and Checkmarx during the "Write" stage is that they allow developers to address security issues early, rather than waiting until later stages of the SDLC. By identifying vulnerabilities at this point, developers can prevent them from being passed down the pipeline and incorporated into production code, reducing the risk of security breaches.

Testing Stage

In the "Test" stage of software development, security should be a top priority to confirm that the application behaves securely and meets its security requirements. Key steps include verifying access control for each role within the application, confirming that authentication works correctly, and checking that features such as password masking protect sensitive information.

Tools like Robot Framework enable automated testing of security aspects such as authentication and role-based access control (RBAC). Manual testing remains essential for scenarios that automated tests might not cover, such as how the system handles malicious input and how it responds to attempted attacks.

Tools Used

Robot Framework

Robot Framework is an open-source automation framework for testing a wide range of applications. Through its test libraries, developers can automate checks of authentication mechanisms, role-based access controls, and other security-critical functions. Automated tests help catch security regressions early and flag any deviation from the security requirements, improving the security posture of the application.
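Robot Framework suites are normally written in its keyword-driven syntax, but the underlying idea, an automated role-by-action access check, can be sketched in plain Python. The roles, actions, and the `is_allowed` function are hypothetical stand-ins for the application under test; in a real suite, `is_allowed` would exercise the application itself (for example, by calling its API while logged in as each role).

```python
from typing import Dict, List, Set, Tuple

# Hypothetical authorization logic standing in for the application under test.
ROLE_GRANTS: Dict[str, Set[str]] = {
    "admin": {"read_report", "edit_report", "delete_user"},
    "editor": {"read_report", "edit_report"},
    "viewer": {"read_report"},
}

def is_allowed(role: str, action: str) -> bool:
    """Stand-in for the real authorization check; unknown roles get nothing."""
    return action in ROLE_GRANTS.get(role, set())

# The expected policy, written independently of the implementation above.
EXPECTED: Dict[Tuple[str, str], bool] = {
    ("admin", "delete_user"): True,
    ("editor", "edit_report"): True,
    ("editor", "delete_user"): False,
    ("viewer", "read_report"): True,
    ("viewer", "edit_report"): False,
    ("anonymous", "read_report"): False,
}

def rbac_violations() -> List[Tuple[str, str, bool]]:
    """Return every (role, action, actual_result) that disagrees with EXPECTED."""
    return [
        (role, action, is_allowed(role, action))
        for (role, action), expected in EXPECTED.items()
        if is_allowed(role, action) != expected
    ]

if __name__ == "__main__":
    violations = rbac_violations()
    print("RBAC OK" if not violations else f"RBAC violations: {violations}")
```

Keeping the expected matrix separate from the implementation is the point of the design: a regression that, say, grants viewers edit rights shows up as a violation instead of passing silently.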

Manual Testing

While automated tools are extremely useful, manual testing is still essential, especially for security. Human testers can simulate complex attack scenarios, such as SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF), that automated tests might not fully cover. Manual testing is especially important for evaluating edge cases, the system's response to malicious user input, and its overall resilience against attack vectors that evolve over time. It also lets testers explore user workflows in depth, so that critical vulnerabilities are not overlooked.

Why Security Must Be Prioritized in Testing

The testing phase is where the security of an application is evaluated most thoroughly. Because the software is subjected to simulated real-world attacks at this stage, it is the last good opportunity to catch vulnerabilities before release, protecting both the application and its users. During this phase, security checks should focus on:

- Authentication and Authorization: Ensuring that only authorized users have access to sensitive data or actions. Role-based access control (RBAC) needs to be rigorously tested to verify that permissions are correctly assigned and enforced.

- Input Validation: Verifying that user inputs are validated and sanitized properly to avoid common vulnerabilities like SQL injection or XSS, where an attacker could inject malicious scripts or commands into the system.

- Session Management: Ensuring that session tokens are securely generated, managed, and expired appropriately to prevent session hijacking or fixation attacks.

- Password Protection: Verifying that passwords are masked when entered and are never stored or logged in plaintext; stored passwords should be hashed with a slow, salted algorithm such as bcrypt, scrypt, or Argon2.
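The input-validation point can be made concrete with Python's built-in `sqlite3` module. The first function below splices user input into the SQL text, so a crafted value changes the query's logic; the second passes the input as a bound parameter, so the database treats it strictly as data. The table, rows, and payload are invented for the example.

```python
import sqlite3

def setup() -> sqlite3.Connection:
    """Create an in-memory database with two example users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "viewer")],
    )
    return conn

def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: the input becomes part of the SQL statement itself.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # SAFE: the ? placeholder binds the input as a value, never as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()

if __name__ == "__main__":
    conn = setup()
    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: the injected OR matches every row
    print(find_user_safe(conn, payload))         # []
```

A security test suite can feed payloads like this one to every input and assert that no extra data comes back.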

Automated vs. Manual Security Testing

Automated testing tools such as Robot Framework excel at running repetitive, large-scale security tests, for example of authentication workflows, access rights, and scripted penetration tests. They help confirm that basic security mechanisms work correctly across different configurations. However, no automated system can fully replace human intuition and creativity, especially when simulating real-world hacking attempts and complex attack strategies. Manual testing is needed to uncover vulnerabilities that automated tests miss, such as human errors in access control logic or unexpected interactions between components.

Packaging Stage

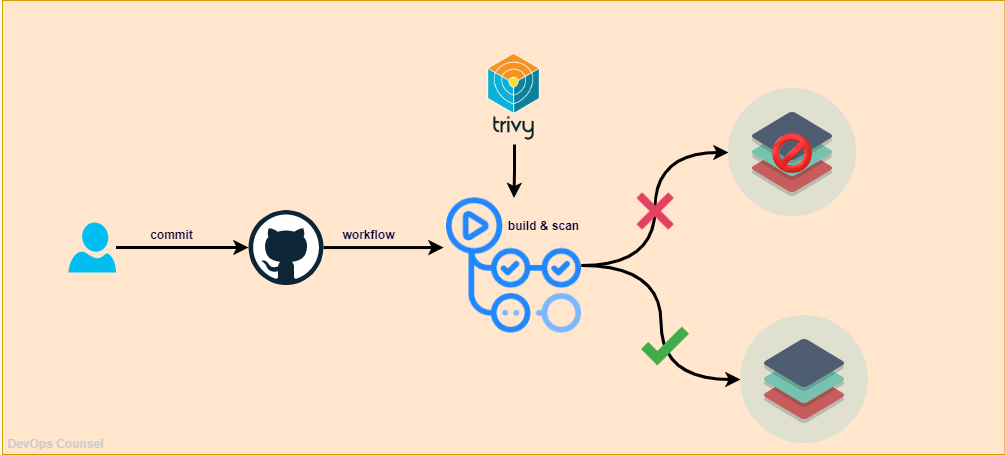

In the "Packaging" stage of software development, security is enforced through measures that ensure the Docker image produced contains no known, unaddressed vulnerabilities. A key step is scanning the image for vulnerabilities in the base image and in any software installed on top of it. By scanning images with tools such as the Trivy image scanner, development teams can ensure that only images that pass the scan are allowed into production environments.

In addition, to minimize the attack surface, it is highly recommended to use Distroless or Alpine Linux as the base image. These images are smaller in size and contain fewer components that could potentially have security holes. Moreover, using a non-root user within the Docker container is also strongly advised to limit access rights and reduce the risk in case of an exploit.
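Both recommendations can be checked automatically before an image is built. The sketch below inspects Dockerfile text and reports two problems in the final build stage: a base image outside an allow-list and a stage that still runs as root. The allow-list prefixes are assumptions for the example (`alpine` and `gcr.io/distroless/`, the path Google's distroless images are published under), and the simple line parser ignores Dockerfile features such as line continuations and build arguments.

```python
from typing import List

ALLOWED_BASE_PREFIXES = ("alpine", "gcr.io/distroless/")  # assumption for this example

def check_dockerfile(text: str) -> List[str]:
    """Return problems found in the final (shipped) stage of a Dockerfile."""
    final_base = None
    final_user = "root"  # containers run as root unless USER says otherwise
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, args = line.partition(" ")
        instruction = instruction.upper()  # instructions are case-insensitive
        if instruction == "FROM":
            # Each FROM starts a new stage; only the last stage becomes the image.
            image_tokens = [t for t in args.split() if not t.startswith("--")]
            final_base = image_tokens[0] if image_tokens else None
            final_user = "root"
        elif instruction == "USER":
            final_user = args.strip()
    problems = []
    if final_base is None:
        problems.append("no FROM instruction found")
    elif not final_base.startswith(ALLOWED_BASE_PREFIXES):
        problems.append(f"base image not in allow-list: {final_base}")
    # "root", "0" and "0:<group>" all mean the root user.
    if final_user.split(":")[0] in ("root", "0"):
        problems.append("final stage runs as root; add a non-root USER")
    return problems

GOOD = """
FROM golang:1.22 AS build
RUN go build -o /app .
FROM gcr.io/distroless/static-debian12
COPY --from=build /app /app
USER nonroot
ENTRYPOINT ["/app"]
"""
BAD = "FROM ubuntu:22.04\nRUN apt-get update\n"

if __name__ == "__main__":
    print(check_dockerfile(GOOD))  # []
    print(check_dockerfile(BAD))
```

Only the final stage is checked because, in a multi-stage build, earlier stages (like the Go build stage above) never ship.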

Docker image storage must also be secured. Registries should restrict access to authorized users and protect stored images, for example by encrypting them at rest and in transit. Images should also be signed so that the image running in production can be verified as the one that was built and shown not to have been tampered with. Registries such as Harbor and Quay manage the secure storage and distribution of images.

Tools Used

Alpine Linux or Distroless as Base Images

Using smaller base images like Alpine Linux or Distroless offers several security advantages. These images are minimalistic, containing only the essential software needed for the application to run. This reduction in size and components directly reduces the number of potential vulnerabilities in the container, minimizing the attack surface. The fewer the components, the less there is to maintain and secure. Additionally, Distroless images do not contain package managers, shells, or other debugging tools, further reducing the potential for security issues.

Trivy by Aqua Security for Image Scanning

Trivy is a comprehensive open-source security scanner for Docker images. It scans Docker images for vulnerabilities in operating system packages, application dependencies, and any other software included in the image. By using Trivy, developers can identify vulnerabilities early in the packaging process, ensuring that only secure images are deployed. This scanning process also includes checks for known CVEs (Common Vulnerabilities and Exposures) and unpatched security issues that could be exploited by attackers.
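In a pipeline, the scan result usually becomes a pass/fail gate. Trivy can do this on its own (`--exit-code 1 --severity HIGH,CRITICAL`), but a small script over its JSON report (`trivy image --format json --output report.json <image>`) allows custom rules. The sketch below assumes the report layout Trivy emits (a top-level `Results` list whose entries carry an optional `Vulnerabilities` list with `VulnerabilityID`, `PkgName`, and `Severity` fields); verify it against the Trivy version you run. The blocking severities are a policy assumption.

```python
import json
import sys
from typing import Iterable, List, Tuple

BLOCKING_SEVERITIES = ("HIGH", "CRITICAL")  # assumed policy; adjust per team

def blocking_findings(
    report: dict, blocking: Iterable[str] = BLOCKING_SEVERITIES
) -> List[Tuple[str, str, str]]:
    """Return (vulnerability ID, package, severity) for each finding at a blocking severity."""
    levels = set(blocking)
    findings = []
    for result in report.get("Results") or []:
        # Targets without findings may omit "Vulnerabilities" or set it to null.
        for vuln in result.get("Vulnerabilities") or []:
            severity = vuln.get("Severity")
            if severity in levels:
                findings.append((vuln.get("VulnerabilityID"), vuln.get("PkgName"), severity))
    return findings

def gate(report_path: str) -> int:
    """Print blocking findings and return a process exit code for CI."""
    with open(report_path, encoding="utf-8") as fh:
        findings = blocking_findings(json.load(fh))
    for vuln_id, pkg, severity in findings:
        print(f"{severity}: {vuln_id} in {pkg}")
    return 1 if findings else 0  # a non-zero exit code fails the pipeline step

if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(gate(sys.argv[1]))
```

Invoked with the report path as its only argument, the script fails the CI step whenever a blocking finding is present.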

Harbor/Quay for Image Registry

Harbor and Quay are secure Docker image registries used to store and manage Docker images. These platforms provide features like role-based access control (RBAC), image vulnerability scanning, and support for image signing, ensuring that only verified, secure images are distributed to production environments. Harbor, for example, allows for image signing and scanning, which helps maintain integrity and security throughout the CI/CD pipeline. Quay also provides similar features, ensuring secure and reliable storage and access for Docker images.

Cosign for Image Signing

Cosign, part of the Sigstore project, is a tool for signing container images and other artifacts so that teams can confirm the images used in production have not been tampered with. By signing images and verifying the signatures before deployment, development teams can check the authenticity and integrity of Docker images, which guards against malicious modifications between build and deployment, including while images sit in a registry.
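The core idea behind image signing (sign the image digest at build time, then refuse anything whose digest or signature fails to check out at deploy time) can be sketched with the standard library. Note the substitution: Cosign uses public-key signatures (or Sigstore's keyless certificates), whereas this sketch uses an HMAC with a shared secret only because Python's standard library has no public-key signing. The key and the image bytes are placeholders.

```python
import hashlib
import hmac

def image_digest(image_bytes: bytes) -> str:
    """Digest in the sha256:<hex> form registries use (raw bytes stand in for the manifest)."""
    return "sha256:" + hashlib.sha256(image_bytes).hexdigest()

def sign(digest: str, key: bytes) -> str:
    # Stand-in for a real public-key signature: an HMAC over the digest string.
    return hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()

def verify(image_bytes: bytes, signature: str, key: bytes) -> bool:
    """Recompute the digest from the content and check the signature over it."""
    expected = sign(image_digest(image_bytes), key)
    return hmac.compare_digest(expected, signature)  # constant-time comparison

if __name__ == "__main__":
    key = b"placeholder-signing-key"  # never hard-code real keys
    image = b"...image manifest bytes..."
    sig = sign(image_digest(image), key)          # done once, in the build pipeline
    print(verify(image, sig, key))                # True
    print(verify(image + b"tampered", sig, key))  # False
```

Because the signature covers the content digest rather than a tag, any change to the image, however small, produces a different digest and fails verification.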

Why Security Must Be Focused on During Packaging

The packaging stage is critical because it involves creating the final, deployable version of the software. The Docker image represents the application and its dependencies, and any security vulnerabilities within the image could directly impact the security of the running application. Therefore, security controls such as image scanning, signing, and the use of minimal base images are crucial to ensure that only trusted, secure software is packaged and deployed.

Furthermore, minimizing the attack surface by using smaller, more secure base images, like Alpine Linux or Distroless, reduces the number of potential entry points for attackers. Adopting security practices such as running containers as non-root users and applying image signing further strengthens the security of the packaged software by preventing unauthorized access and ensuring the integrity of the images.

Provisioning Stage

During infrastructure provisioning and configuration, Infrastructure as Code (IaC) is used to make the infrastructure immutable, accountable, and fully automated. With IaC, infrastructure management becomes structured and documented, so every change to the infrastructure can be tracked and audited.

Tools such as Terraform or Pulumi enable the automatic provisioning of infrastructure based on code definitions, ensuring that each infrastructure environment created is consistent and reproducible. This supports the concept of immutability, where changes to the infrastructure are not made by directly modifying the existing infrastructure, but instead by replacing components with new ones based on updated code.

Additionally, Ansible is used for automatic configuration of the infrastructure, ensuring that each server or infrastructure component is configured correctly according to predefined standards. With IaC, this configuration process becomes more reliable and reproducible, reducing the potential for manual errors and ensuring that the infrastructure is always in the desired state.

Tools Used

Terraform/Pulumi for Infrastructure Automation

Terraform and Pulumi provision infrastructure automatically from code. Both let teams describe the desired state of the infrastructure and then create, update, or remove resources to match it. Terraform uses a declarative configuration language (HCL), while Pulumi lets developers use general-purpose programming languages (such as TypeScript, Python, or Go) to manage cloud resources. These tools keep provisioning consistent across environments, making complex infrastructure setups easier to manage.

With these tools, teams can create, update, and delete resources with minimal human intervention, keeping the infrastructure consistent, scalable, and easy to replicate. This approach supports the principle of immutability: wherever possible, changes are made by replacing resources rather than modifying them in place, so new configurations are deployed reliably.
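The declarative model can be illustrated with a toy "plan" step: compare the desired state declared in code with the current state and derive the actions needed to reconcile them. Following the immutability principle, a changed resource is replaced rather than edited in place. This is not how Terraform or Pulumi are implemented (both track state, dependencies, and per-attribute update rules), and the resource names and attributes are invented.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, Dict[str, str]]  # resource name -> attributes

def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Return (action, resource_name) pairs that move current toward desired."""
    actions = []
    for name in sorted(desired.keys() | current.keys()):
        if name not in current:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != current[name]:
            # Immutable approach: build a new resource instead of patching the old one.
            actions.append(("replace", name))
        # Resources whose attributes already match need no action.
    return actions

if __name__ == "__main__":
    desired = {
        "web-1": {"image": "web-v2", "size": "small"},
        "db-1": {"engine": "postgres", "size": "large"},
    }
    current = {
        "web-1": {"image": "web-v1", "size": "small"},
        "cache-1": {"engine": "redis", "size": "small"},
    }
    for action, name in plan(desired, current):
        print(action, name)  # delete cache-1, create db-1, replace web-1
```

Running the plan again after applying it yields no actions, which is what makes declarative provisioning repeatable.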

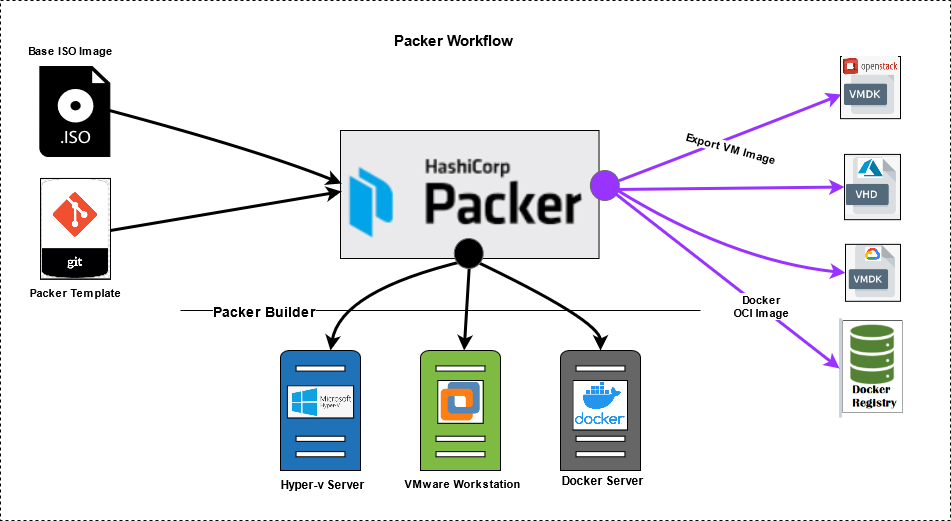

Packer for Image Automation

Packer is an open-source tool that automates the creation of machine images. Because image builds are automated, images can be rebuilt regularly to pick up the latest software updates and security patches. Packer is often paired with tools like Terraform: Packer produces reusable, consistent base images for servers or virtual machines, and these images are then used in environments such as development, staging, or production. Using Packer standardizes environment setup, speeds up provisioning, and reduces the chances of configuration drift.

Packer works seamlessly with cloud platforms like AWS, Azure, and Google Cloud, making it an ideal tool for creating consistent, secure, and reusable images across multiple cloud environments. This further improves the overall security and stability of the infrastructure by ensuring that images used in production are always up to date and built according to defined standards.

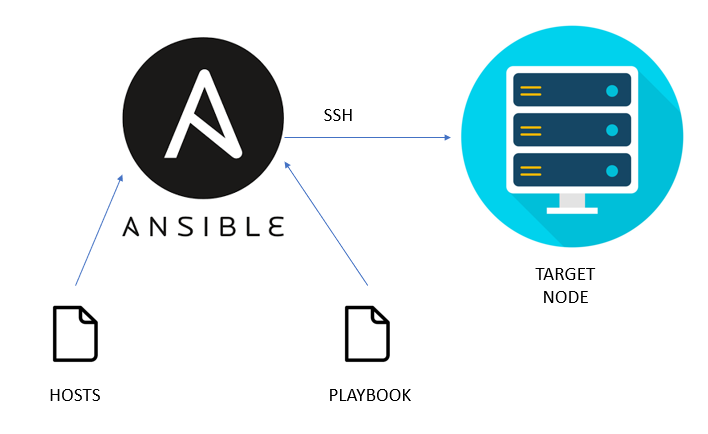

Ansible for Server Configuration Management

Ansible is a tool that automates server configuration management and application deployment. It is widely used to configure servers, ensure that systems are correctly set up, and apply configuration changes consistently across multiple servers. With Ansible, teams can define infrastructure configurations using YAML playbooks, which describe the desired state of a server or infrastructure component.

In the provisioning stage, Ansible plays a critical role in ensuring that the environment is configured according to the defined standards. Whether it is installing software, setting up security policies, or managing user accounts, Ansible automates these processes so that configurations are reproducible and far less prone to human error. This helps avoid configuration drift and keeps infrastructure in the expected state, contributing to more secure and stable environments.
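Ansible's reproducibility rests on idempotent tasks: running a task twice leaves the system in the same state as running it once, and each run reports whether it changed anything. The sketch below imitates that contract for a single "ensure this line is present" step, loosely modeled on what Ansible's `lineinfile` module does. The target file and setting are hypothetical, and real Ansible modules handle far more cases (regex replacement, backups, permissions).

```python
import os
import tempfile

def ensure_line(path: str, line: str) -> bool:
    """Make sure `line` is present in the file; return True only if the file changed."""
    text = ""
    if os.path.exists(path):
        with open(path, encoding="utf-8") as fh:
            text = fh.read()
    if line in text.splitlines():
        return False  # already in the desired state: do nothing
    with open(path, "a", encoding="utf-8") as fh:
        if text and not text.endswith("\n"):
            fh.write("\n")  # keep the new entry on its own line
        fh.write(line + "\n")
    return True

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        config = os.path.join(tmp, "sshd_config")  # hypothetical target file
        print(ensure_line(config, "PermitRootLogin no"))  # True: first run changes the file
        print(ensure_line(config, "PermitRootLogin no"))  # False: second run is a no-op
```

The "changed" flag is what lets a configuration run double as a drift check: on a server that is already compliant, every task reports no change.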

Why Infrastructure as Code (IaC) Matters in Provisioning

The use of IaC tools like Terraform, Pulumi, Packer, and Ansible ensures that infrastructure is not only provisioned automatically but also consistently and securely. By treating infrastructure as code, all configurations are versioned, stored, and managed in source control systems, providing transparency and traceability for every change made to the infrastructure.

IaC also ensures that the infrastructure can be easily replicated or scaled, reducing manual intervention and the risk of human error. The concept of immutability, where resources are replaced rather than altered, ensures that environments are predictable and stable. Additionally, IaC facilitates quick recovery from failures by enabling the rapid redeployment of infrastructure using predefined code.

The Benefits of IaC in Provisioning:

- Automation: Infrastructure provisioning becomes fully automated, reducing the need for manual intervention and increasing efficiency.

- Consistency: Using code to define infrastructure ensures that environments are consistently created, avoiding configuration drift between development, staging, and production.

- Accountability and Auditing: Since every change is tracked in version control systems, organizations can easily audit changes and maintain accountability for any adjustments made to the infrastructure.

- Immutability: Infrastructure changes are made by replacing components rather than modifying them, reducing the risk of errors or unintended consequences.

- Scalability: IaC tools allow infrastructure to scale easily by creating or tearing down resources on demand, ensuring that the infrastructure can grow or shrink according to business needs.

Deployment Stage

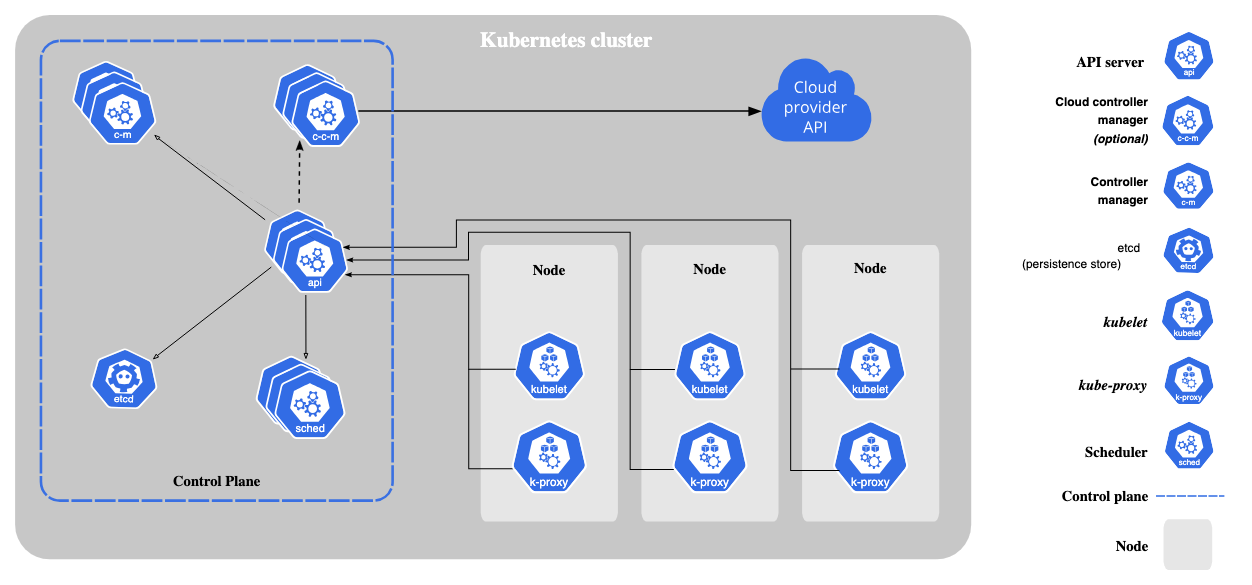

Kubernetes serves as the primary platform for managing the server and application lifecycle in production. The security of applications deployed on Kubernetes can be controlled by ensuring that deployments comply with the Pod Security Standards defined by Kubernetes. This includes configuring security contexts, which govern the privileges and access rights of pods and containers, and setting resource limits that prevent applications from consuming more CPU and memory than permitted.
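The kind of check implied here can be sketched as a function over a pod manifest parsed into a dictionary. The field names (`securityContext.runAsNonRoot`, `securityContext.allowPrivilegeEscalation`, `securityContext.privileged`, `resources.limits`) are standard Kubernetes pod-spec fields; which rules to enforce, and treating a missing field as a violation, are assumptions of this sketch. In a real cluster, this job belongs to an admission controller such as Pod Security Admission or Kyverno rather than a standalone script.

```python
from typing import Any, Dict, List

def check_pod(pod: Dict[str, Any]) -> List[str]:
    """Return least-privilege and resource-limit violations for each container."""
    problems = []
    spec = pod.get("spec", {})
    pod_ctx = spec.get("securityContext", {})
    for c in spec.get("containers", []):
        name = c.get("name", "<unnamed>")
        ctx = c.get("securityContext", {})
        # runAsNonRoot may be set at pod level and overridden per container.
        if ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot")) is not True:
            problems.append(f"{name}: runAsNonRoot must be true")
        if ctx.get("allowPrivilegeEscalation") is not False:
            problems.append(f"{name}: allowPrivilegeEscalation must be false")
        if ctx.get("privileged") is True:
            problems.append(f"{name}: privileged containers are not allowed")
        limits = c.get("resources", {}).get("limits", {})
        for resource in ("cpu", "memory"):
            if resource not in limits:
                problems.append(f"{name}: missing {resource} limit")
    return problems

POD = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web"},
    "spec": {
        "securityContext": {"runAsNonRoot": True},
        "containers": [{
            "name": "web",
            "image": "registry.example.com/web:1.4.2",  # hypothetical image
            "securityContext": {"allowPrivilegeEscalation": False},
            "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
        }],
    },
}

if __name__ == "__main__":
    print(check_pod(POD))  # []
```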

Additionally, extra security measures can be applied through strict deployment policies. For example, only signed and verified Docker images can be deployed, and only stable versions of Docker images are allowed in production environments. This ensures that applications running on Kubernetes are using tested and secure versions, and prevents the use of unauthenticated or potentially compromised images.
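A rule such as "only pinned, stable images" can be expressed as a check on the image reference. The sketch below accepts only references from an approved registry that are pinned by `@sha256:` digest, and it rejects the mutable `latest` tag. The registry name and the digest-pinning requirement are assumptions of the example; verifying the signature itself would be handled separately, for example by a policy engine.

```python
import re
from typing import Optional

ALLOWED_REGISTRY = "registry.example.com/"  # hypothetical internal registry
DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}$")

def image_rejection_reason(image: str) -> Optional[str]:
    """Return why an image reference violates the policy, or None if it is allowed."""
    if not image.startswith(ALLOWED_REGISTRY):
        return "image must come from the approved registry"
    # Drop any digest, then look for a tag in the last path segment only
    # (a ':' earlier in the reference could be a registry port).
    last_segment = image.split("@", 1)[0].rsplit("/", 1)[-1]
    tag = last_segment.partition(":")[2]
    if tag == "latest":
        return "the mutable 'latest' tag is not allowed"
    if not DIGEST_RE.search(image):
        return "image must be pinned by sha256 digest"
    return None

if __name__ == "__main__":
    pinned = "registry.example.com/web:1.4.2@sha256:" + "a" * 64
    for ref in (pinned, "registry.example.com/web:latest", "docker.io/library/nginx:1.27"):
        print(ref[:40], "->", image_rejection_reason(ref) or "allowed")
```

Pinning by digest matters because a tag can be moved to point at a different image, while a digest always identifies exactly the content that was tested and signed.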

To further secure communication between services within the Kubernetes cluster, a Service Mesh such as Consul or Istio can be used. A Service Mesh adds an extra layer of security by encrypting traffic between services and authenticating both ends of each connection with mutual TLS (mTLS), protecting data in transit from interception and other types of attacks.

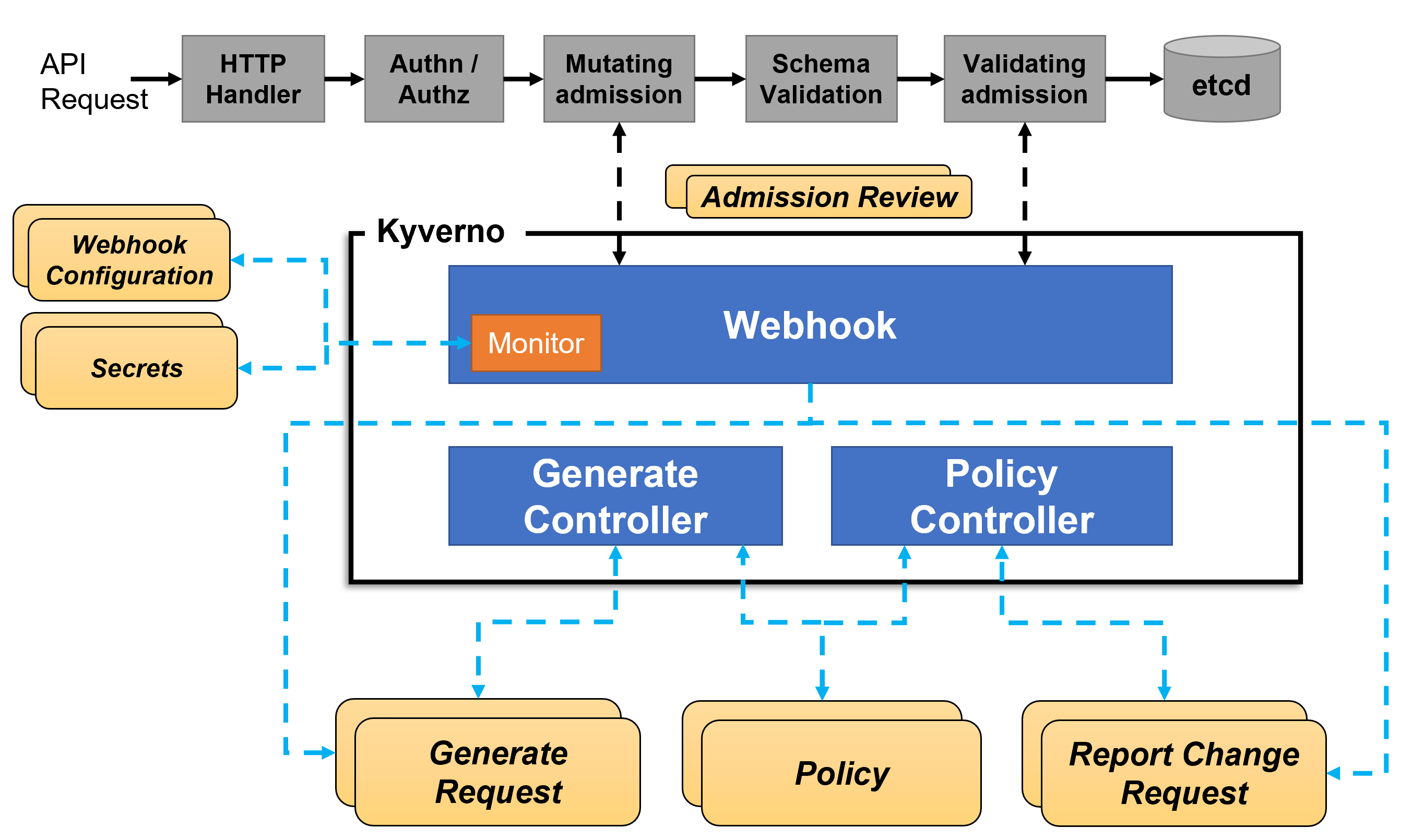

Tools like Kyverno (Policy Engine) are used to enforce security policies across the Kubernetes cluster, while Sigstore is used for image signing, ensuring the integrity and authentication of Docker images deployed in the environment.

Tools Used

Kubernetes for Application Deployment

Kubernetes is a powerful container orchestration platform that automates the deployment, scaling, and management of containerized applications. In a production environment, Kubernetes ensures that applications are deployed in a highly available, fault-tolerant manner. It provides features such as automatic scaling, self-healing, and rollbacks, making it ideal for managing applications in production. However, to maintain security, Kubernetes must be configured correctly to control access and permissions to resources within the cluster.

Kyverno for Policy Enforcement

Kyverno is a policy engine designed for Kubernetes that helps enforce security policies across the cluster. It allows administrators to define and enforce best practices for security, such as ensuring only signed images are deployed, restricting the use of privileged containers, and enforcing resource quotas. Kyverno can automatically validate and enforce policies on resource creation, updates, and deletions, ensuring that deployments comply with the established security standards.

Sigstore for Image Signing

Sigstore is an open-source project for signing and verifying software artifacts, including Docker images; Cosign is its container-signing tool. Signing images before they are deployed to Kubernetes makes it possible to verify that they have not been tampered with and come from a trusted source. Combined with an admission policy that checks signatures (for example, a Kyverno image-verification rule), this ensures that only verified images run in production, reducing the risk of deploying compromised or untrusted code.

Service Mesh (Consul or Istio) for Secure Service Communication

A Service Mesh like Consul or Istio provides a secure way to manage service-to-service communication within Kubernetes clusters. It ensures that communications between services are encrypted and authenticated, preventing data interception and man-in-the-middle attacks. Service Meshes provide fine-grained control over traffic, security, and policies governing the communication between services. They also help in ensuring that only trusted services communicate with one another, and offer features like service discovery, load balancing, and tracing.
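A mesh sidecar performs mutual TLS on the application's behalf. To show what that means at the protocol level, here is a server-side TLS configuration using Python's standard `ssl` module that refuses any client lacking a certificate signed by a trusted CA. The certificate file names are hypothetical; in Istio or Consul the mesh issues and rotates these certificates automatically, so application code does not do this itself.

```python
import ssl
from typing import Optional

def mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a server-side TLS context that requires a verified client certificate."""
    # Start from a bare server context so no system CAs are trusted implicitly;
    # only the CA passed in client_ca_file (the mesh CA) should vouch for peers.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Mutual TLS: the handshake fails unless the client presents a certificate
    # that chains to a trusted CA.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # this service's identity
    if client_ca_file:
        ctx.load_verify_locations(cafile=client_ca_file)  # CA that signs peer identities
    return ctx

if __name__ == "__main__":
    # With real (hypothetical) files: mtls_server_context("svc.crt", "svc.key", "mesh-ca.crt")
    ctx = mtls_server_context()
    print(ctx.verify_mode == ssl.CERT_REQUIRED)  # True
```

Requiring a client certificate is what turns ordinary TLS into service authentication: each side proves its identity, not just the server.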

Why Security Is Critical During Deployment

The deployment stage is critical for ensuring that the application is not only functional but also secure in a production environment. Once an application is deployed, it becomes accessible to users, and any vulnerabilities can lead to significant security risks. Therefore, security must be embedded into the deployment process to prevent issues such as unauthorized access, resource abuse, and insecure communication between services.

Key security practices for deployment include:

- Configuring Security Contexts: Ensuring that containers and pods have the least privileges required to perform their tasks. This minimizes the risk of attackers gaining elevated privileges if they compromise a pod.

- Enforcing Image Integrity: By using image signing and only allowing verified, signed images, teams can ensure that only trusted code runs in the cluster, reducing the risk of deploying compromised images.

- Implementing Service Mesh: By using tools like Consul or Istio, communication between services is encrypted and authenticated, protecting data in transit and preventing man-in-the-middle attacks or unauthorized access.

The Role of Policies and Automation in Security

To manage the complexity of securing large Kubernetes clusters, enforcing security policies is critical. Tools like Kyverno automate the enforcement of security rules across the cluster, ensuring consistency and compliance without manual intervention. For example, policies can automatically prevent the deployment of containers that run as root, enforce limits on CPU and memory usage, and check for the presence of security patches.

Additionally, automating image signing and verification with tools like Sigstore ensures that only trusted, verified images are used in the deployment process, reducing human error and preventing malicious or unapproved changes from reaching production.

Monitoring Stage

Once the application is live, the primary focus shifts to maintaining its performance and security to ensure it runs smoothly and is protected from potential threats. Achieving this requires the use of comprehensive monitoring tools and techniques, such as logging, Application Performance Management (APM), and Request Tracing. These techniques provide deep visibility into the health of the application, helping teams proactively identify and resolve issues before they affect users.

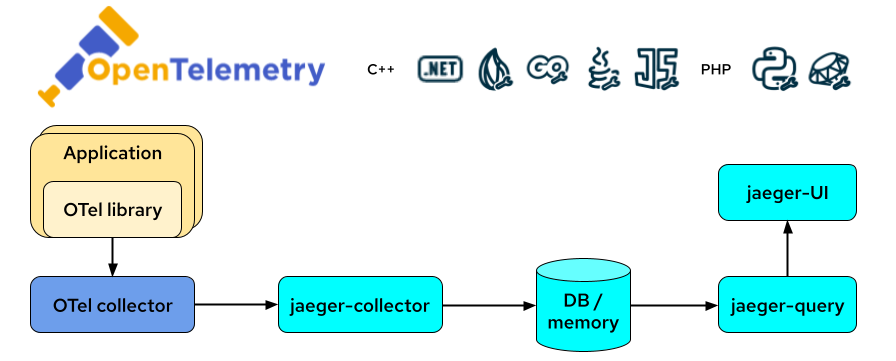

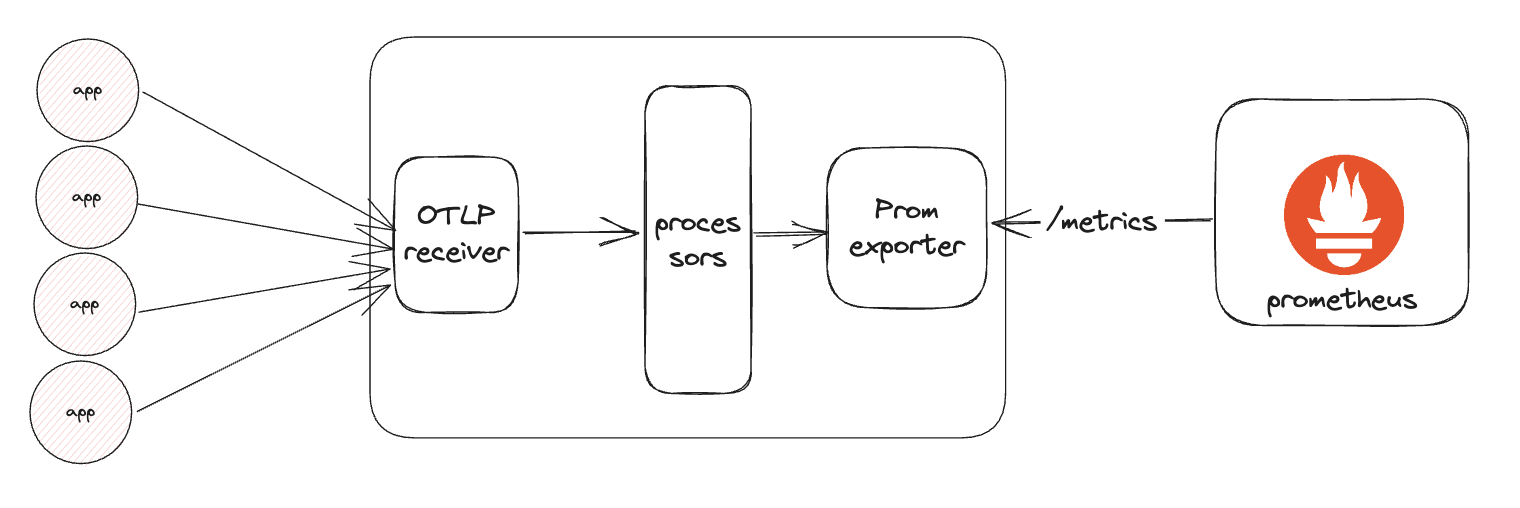

OpenTelemetry serves as the core observability framework during this stage, collecting telemetry from various sources and exporting it to back ends that together provide a comprehensive view of application performance. Jaeger (for tracing) and Prometheus (for performance metrics) are also critical tools, offering the insight needed to monitor the application in real time and identify bottlenecks or other issues.

To enhance security further, it is recommended to use Cyber Threat Management platforms like OpenCTI, which provide real-time alerts if there are indications of attacks on the system. This allows teams to respond quickly and prevent further damage. Additionally, Penetration Testing should be performed regularly to test the system's resilience to attacks and ensure that no security vulnerabilities are overlooked.

Tools Used

OpenTelemetry for Observability

OpenTelemetry is an open-source observability framework for generating, collecting, processing, and exporting telemetry data (logs, metrics, and traces) from applications. It offers a vendor-neutral set of APIs, SDKs, instrumentation libraries, and a Collector that together provide consistent observability across different systems and components. OpenTelemetry does not store or visualize data itself; it routes telemetry to back ends such as Jaeger and Prometheus. By standardizing how telemetry is produced and delivered, it gives teams detailed insight into application health and performance, supporting real-time monitoring, logging, and tracing, better decision-making, and quicker issue resolution.

Jaeger for Tracing

Jaeger is an open-source distributed tracing system that helps monitor and troubleshoot applications in production. It tracks requests as they move through various microservices, helping to pinpoint latency issues, identify bottlenecks, and visualize the flow of requests. Jaeger integrates seamlessly with OpenTelemetry, providing detailed trace data that helps teams understand how different components interact within the system. This tool is especially valuable for monitoring complex, microservices-based architectures, allowing for faster detection of performance degradation and system failures.
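Tracing works by propagating a trace context with every request. OpenTelemetry's default propagator uses the W3C Trace Context `traceparent` header, formatted as `version-traceid-parentid-flags` (a 2-hex-digit version, a 32-hex-digit trace ID, a 16-hex-digit span ID, and 2 hex digits of flags). The sketch below shows how a service continues an incoming trace: it keeps the trace ID, mints a new span ID for its own work, and forwards the updated header downstream. It hand-rolls the header and handles only version `00` for illustration; real services should let the OpenTelemetry SDK do this.

```python
import re
import secrets
from typing import Optional, Tuple

# Version 00 only; lowercase hex as the W3C specification requires.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")

def parse_traceparent(header: str) -> Optional[Tuple[str, str, str]]:
    """Return (trace_id, parent_span_id, flags), or None for a malformed header."""
    match = TRACEPARENT_RE.match(header.strip())
    if not match:
        return None
    trace_id, span_id, flags = match.groups()
    # All-zero IDs are invalid per the W3C Trace Context specification.
    if trace_id == "0" * 32 or span_id == "0" * 16:
        return None
    return trace_id, span_id, flags

def child_traceparent(incoming: Optional[str]) -> str:
    """Continue the incoming trace with a new span ID, or start a new trace."""
    parsed = parse_traceparent(incoming) if incoming else None
    if parsed:
        trace_id, _, flags = parsed
    else:
        trace_id, flags = secrets.token_hex(16), "01"  # new trace, marked as sampled
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"

if __name__ == "__main__":
    incoming = "00-" + "ab" * 16 + "-" + "cd" * 8 + "-01"
    print(child_traceparent(incoming))  # same trace ID, fresh span ID
```

Because every hop keeps the same trace ID, Jaeger can stitch the spans from all services into a single end-to-end view of the request.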

Prometheus and Grafana for Metrics and Dashboards

Prometheus is an open-source system monitoring and alerting toolkit that collects metrics from configured endpoints. It stores these metrics in a time-series database, making it easier to query and analyze them over time. Grafana, which integrates with Prometheus, is used to create interactive dashboards that visualize the metrics in real-time, offering an easy-to-understand representation of the system's health. Together, Prometheus and Grafana provide a powerful combination for monitoring performance, setting up alerts, and detecting anomalies in real-time, helping teams to address potential problems before they escalate.
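Prometheus collects metrics by periodically scraping an HTTP endpoint (conventionally `/metrics`) that serves the plain-text exposition format. The sketch below renders a counter in that format by hand to show what Prometheus actually reads. The metric name `login_failures_total` and its `reason` label are hypothetical, and real services would use an official Prometheus client library instead of formatting text themselves.

```python
from typing import Dict, Tuple

Labels = Tuple[Tuple[str, str], ...]  # ordered (label_name, label_value) pairs

def escape_label_value(value: str) -> str:
    """Escape backslash, double quote and newline, as the text format requires."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")

def render_counter(name: str, help_text: str, samples: Dict[Labels, float]) -> str:
    """Render one counter family; help_text is assumed to contain no newlines or backslashes."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        label_str = ",".join(f'{key}="{escape_label_value(val)}"' for key, val in labels)
        series = f"{name}{{{label_str}}}" if label_str else name
        lines.append(f"{series} {value}")
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    print(render_counter(
        "login_failures_total",
        "Failed login attempts.",
        {(("reason", "bad_password"),): 12, (("reason", "unknown_user"),): 3},
    ), end="")
```

With such a counter scraped, an alerting rule could fire on an expression like `rate(login_failures_total[5m]) > 1`; the threshold here is illustrative, and a sudden rise in failed logins is a classic signal of a brute-force attempt.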

OpenCTI for Cyber Threat Intelligence

OpenCTI (Open Cyber Threat Intelligence) is a platform for managing and sharing cyber threat intelligence. It collects, stores, and analyzes data about potential cyber threats and attacks. By integrating with the broader security monitoring ecosystem, OpenCTI provides real-time alerts when suspicious activity or threats are detected, enabling teams to react swiftly. This proactive approach to threat management helps teams identify and mitigate potential attacks before they cause harm to the system.
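At its simplest, using threat intelligence in monitoring means matching observed activity against known indicators of compromise (IOCs). The sketch below checks the source IP of each log line against a set of indicator networks using Python's standard `ipaddress` module. The indicator list and log format are made up (the addresses come from ranges reserved for documentation); in practice the indicators would be exported from OpenCTI, for example through its API or connectors, which this sketch does not model.

```python
import ipaddress
from typing import Iterable, List, Tuple

# Hypothetical indicators, drawn from documentation-only address ranges.
INDICATORS = [ipaddress.ip_network(n) for n in ("203.0.113.0/24", "198.51.100.7/32")]

def match_indicators(log_lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (source_ip, matched_network) for log lines from known-bad addresses.

    Assumes each line starts with the client IP, as in common web-server access logs.
    """
    hits = []
    for line in log_lines:
        token = line.split(" ", 1)[0]
        try:
            ip = ipaddress.ip_address(token)
        except ValueError:
            continue  # skip lines that do not start with an IP address
        for network in INDICATORS:
            if ip in network:  # IPv6 addresses never match IPv4 networks
                hits.append((str(ip), str(network)))
                break
    return hits

if __name__ == "__main__":
    logs = [
        "203.0.113.45 - - GET /login",
        "192.0.2.10 - - GET /",
        "198.51.100.7 - - POST /admin",
    ]
    for ip, network in match_indicators(logs):
        print(f"ALERT: {ip} matches threat indicator {network}")
```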

Why Monitoring and Security are Critical

The Monitoring stage is essential because it allows teams to maintain a continuous watch over application performance and security. Issues that go undetected can affect user experience, degrade performance, and potentially expose the application to security risks. By integrating observability tools like OpenTelemetry, Jaeger, and Prometheus, development and operations teams gain the ability to detect and diagnose performance issues quickly, ensuring that the application runs efficiently and securely.

In addition to performance monitoring, security should be a major focus during the monitoring stage. Cyber Threat Management platforms like OpenCTI allow teams to stay informed about potential threats in real-time, enabling them to act swiftly if an attack is detected. Furthermore, regular Penetration Testing ensures that security vulnerabilities are identified before attackers can exploit them.

Benefits of Comprehensive Monitoring:

- Real-Time Performance Monitoring: Tools like Jaeger and Prometheus provide real-time visibility into application health, helping to detect and resolve issues before they impact end-users.

- Proactive Issue Resolution: With OpenTelemetry and its integrations, teams can proactively identify issues, minimizing downtime and enhancing the user experience.

- Enhanced Security: Tools like OpenCTI provide timely alerts about potential threats, allowing teams to respond swiftly and prevent data breaches or other security incidents.

- Continuous Improvement: With access to performance and security data, teams can continuously improve the system, optimizing both its functionality and security posture.

- Regulatory Compliance: Continuous monitoring supports compliance with industry regulations by providing the audit trails and logs those regulations require.

Summary

In conclusion, integrating security into every phase of the development lifecycle through DevSecOps is no longer a luxury but a necessity. By adopting this approach, organizations can enhance their security posture, accelerate software delivery, and foster a culture of collaboration across development, security, and operations teams. As cyber threats become increasingly sophisticated, DevSecOps offers a robust way to identify and address vulnerabilities proactively, ensuring that security is not an afterthought but an integral part of the development process. Embracing DevSecOps is not just about safeguarding data and applications. It is also about building trust, ensuring compliance, and empowering organizations to thrive in an ever-evolving digital landscape.